AI-Powered Student Request Routing

E-Wolf Support is an AI-augmented request-routing platform designed to serve FRCC’s 30,000+ students with faster, more accurate support. After analyzing the failures of the previous AI chatbot, which frequently surfaced incorrect deadlines and policy information, I designed a human-in-the-loop model in which AI classifies inquiries and drafts responses while staff verify all outbound communication.

This workflow maintains 100% accuracy for institutional information, reduces manual sorting time by 30–40%, and saves staff an estimated 5–6 hours per week that were previously spent triaging misrouted requests. For students, it delivers clearer routing and more trustworthy guidance; for FRCC, it reduces operational overhead, improves compliance, and creates a scalable foundation for future AI-enabled services.

A Stanford University–Led Research Project, Funded by FRCC

Timeline

July '25 – Dec '25

Role

Product Designer

Led end-to-end product strategy, research, IA, interaction design, prototyping, and testing, while also designing and configuring the LLM logic that powers the system’s AI-assisted classification, drafting, and human-in-the-loop workflows.

The Challenge

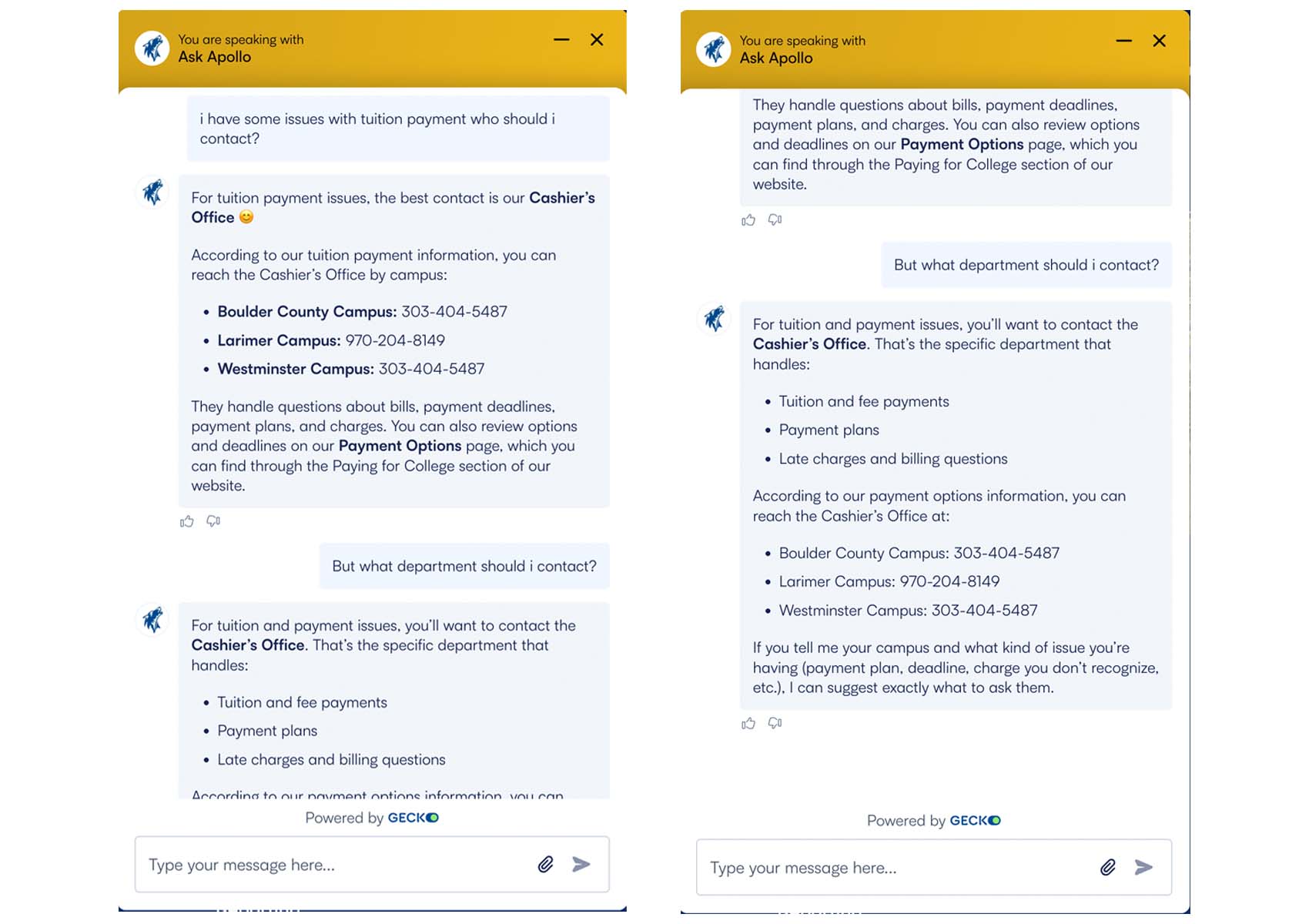

Legacy chatbot responses were repetitive and unreliable on sensitive topics such as tuition, underscoring the need for a human-verified support system.

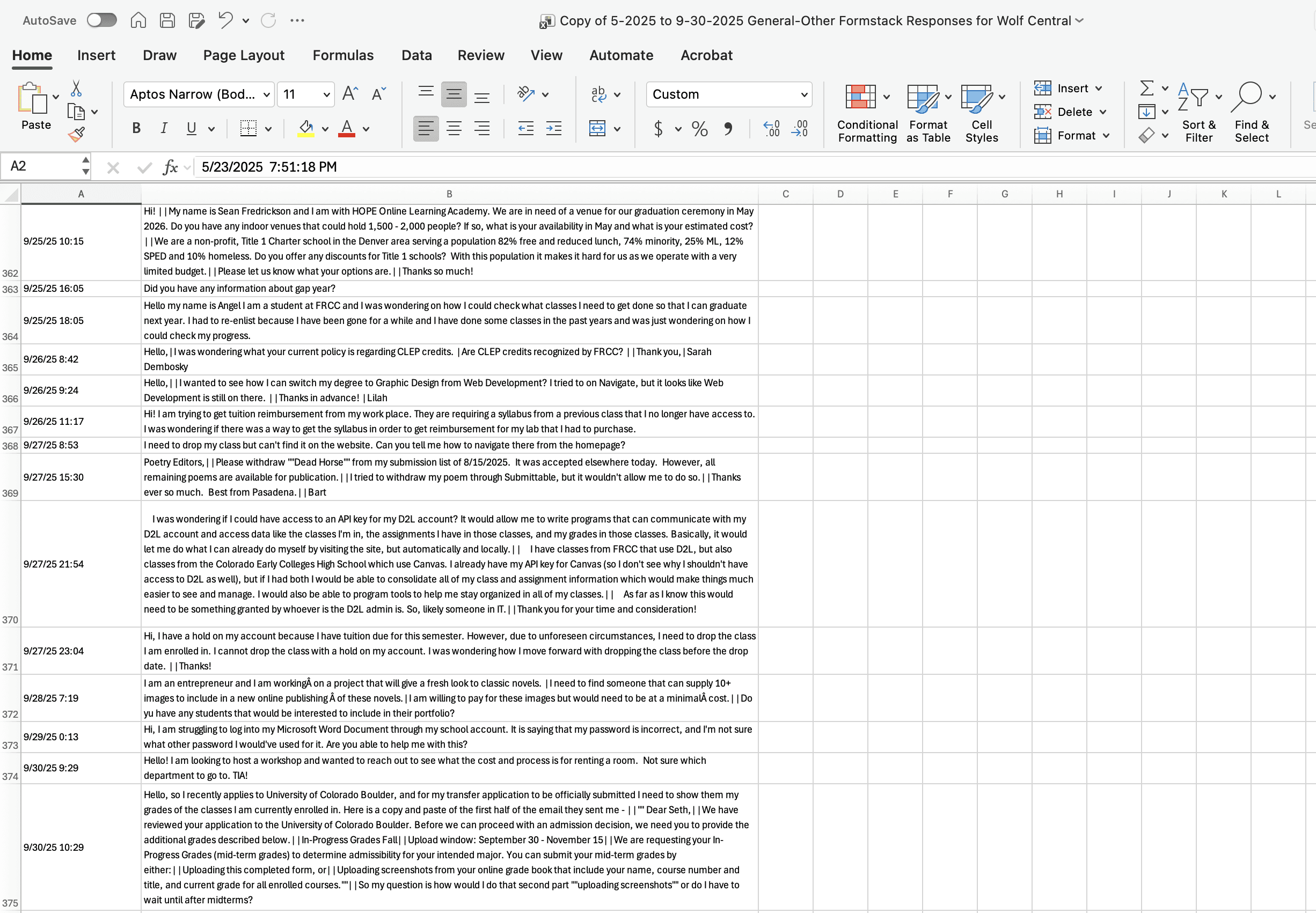

A review of 1,000+ student support submissions revealed frequent misrouting, unclear categories, and heavy manual triage. This dataset became the core training source for E-Wolf’s AI-assisted routing model.

Research & Discovery

Chatbot Evaluation

Tested FRCC's existing AI chatbot to document accuracy issues with critical information.

Data Analysis

Analyzed Formstack submission data to identify patterns and common request types.

Workflow Mapping

Mapped current support workflows to identify inefficiencies.

User Interviews

Conducted interviews with E-Wolf staff and students about their pain points.

Key Findings

Staff Perspective

Need to maintain oversight over AI-generated responses

Want AI to handle routine categorization while they focus on complex cases

Require the ability to refine and personalize AI suggestions

Early Design Work

The solution must create a collaborative relationship between AI and humans, where AI handles pattern recognition and initial categorization while staff provide the nuance, empathy, and contextual understanding that students need.

Defining Structure

With insights in hand, I sketched an updated information structure and user flows. The structure was organized around two axes: user-facing portals and AI-powered backend processes.

AI Processing Layer

Natural Language Processing: Analyzes student request text for intent, keywords, and context

Department Classification: Maps requests to 8 FRCC departments with confidence scoring

Response Generation: Creates contextual draft responses for staff review
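To make the confidence-scoring step concrete, here is a minimal Python sketch of how per-department scores might become a routing decision. It assumes the classifier already returns a probability for each department; the 0.70 cutoff, the `route_request` function, and the department names in the example are hypothetical, not the production configuration. Requests below the cutoff keep their best guess but are flagged for manual triage, which is one way to express the human-in-the-loop principle in code.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical cutoff: predictions below this confidence are sent to staff for triage.
CONFIDENCE_THRESHOLD = 0.70


@dataclass
class RoutingDecision:
    department: Optional[str]  # None only when the classifier returned no scores
    confidence: float
    needs_manual_triage: bool


def route_request(scores: Dict[str, float],
                  threshold: float = CONFIDENCE_THRESHOLD) -> RoutingDecision:
    """Pick the highest-scoring department and flag low-confidence cases for staff."""
    if not scores:
        # Nothing to go on: a human has to route this request.
        return RoutingDecision(None, 0.0, True)
    department, confidence = max(scores.items(), key=lambda item: item[1])
    # The best guess stays visible to staff either way; only confident
    # predictions are routed without a manual-triage flag.
    return RoutingDecision(department, confidence, confidence < threshold)
```

For example, scores of 0.86 for Financial Aid, 0.10 for Registration, and 0.04 for Academic Support route to Financial Aid with no triage flag, while a 0.45 vs 0.40 split is flagged for staff. Keeping the threshold as a parameter leaves room to tune it as staff corrections accumulate.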

The Solution

Design Philosophy: Augmentation, Not Automation

Unlike traditional automation that replaces human workers, this system is designed to augment staff capabilities. The AI handles the repetitive pattern recognition and initial categorization, freeing staff to focus on what humans do best: providing empathy, nuanced judgment, and personalized support.

This creates a partnership model in which AI and humans learn from each other: the AI improves through staff corrections, while staff benefit from its ability to process patterns at scale.

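The result is a two-part system (a student-facing submission portal and a staff-facing dashboard) that combines AI classification and drafting with human oversight to create better outcomes for students and staff alike.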

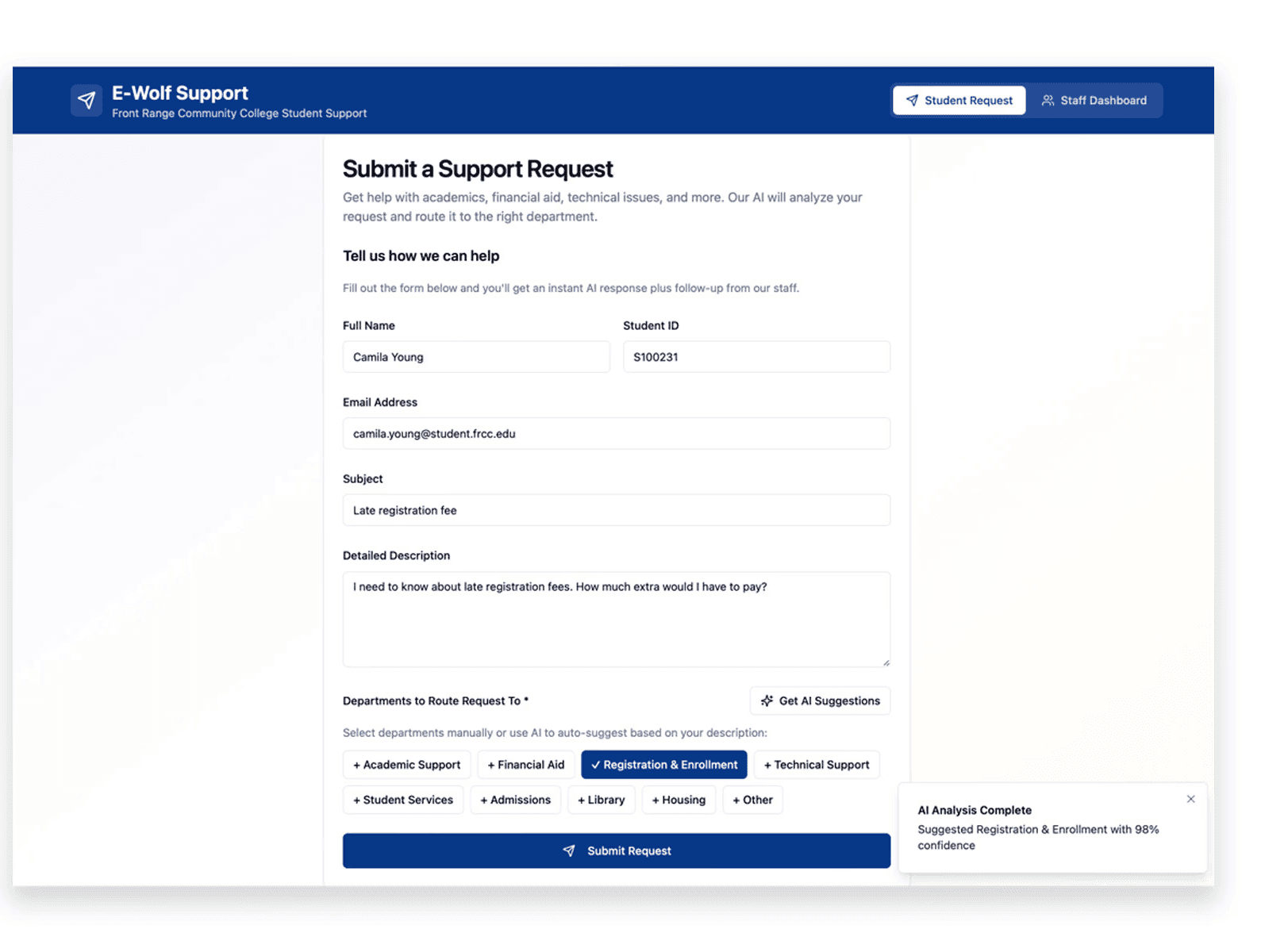

Smart Request Submission (Student View)

On the student submission side, AI analyzes the user’s issue description and suggests relevant departments (e.g., Academic Support, Financial Aid, Registration). This lowers cognitive load for students who may not know which office to contact, while giving staff clean, pre-structured tickets for faster handling. Students can still override suggestions, ensuring transparency and control.

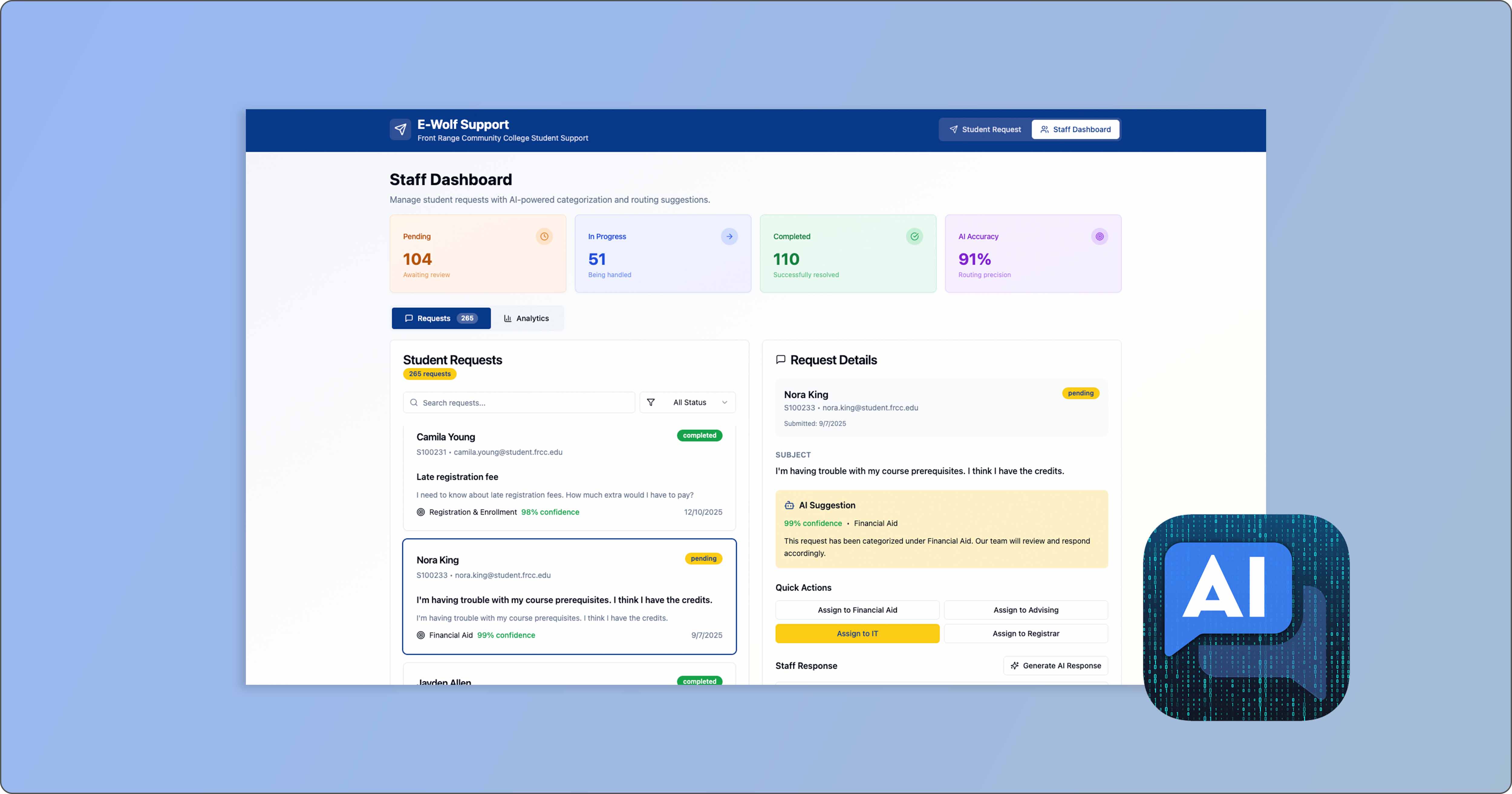

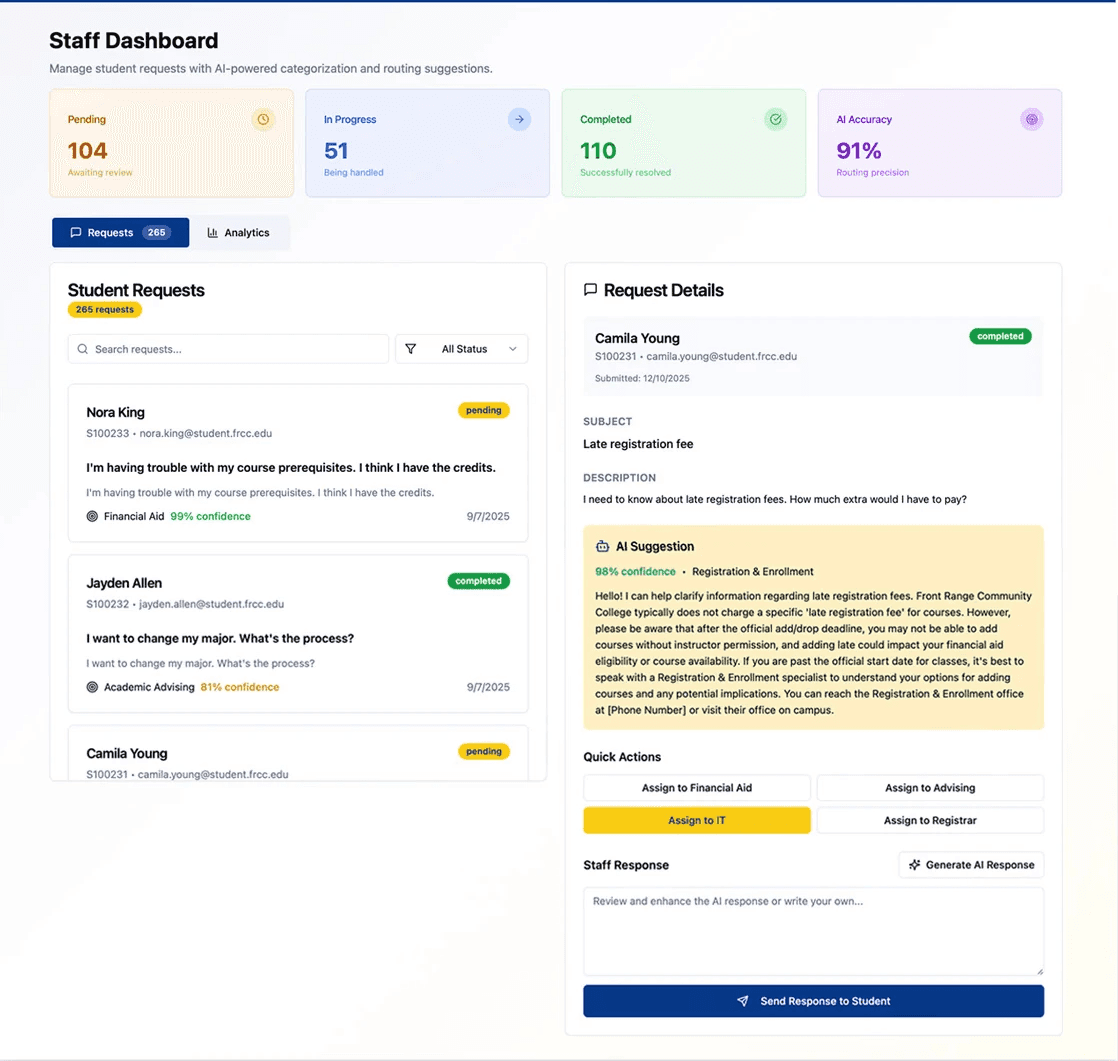

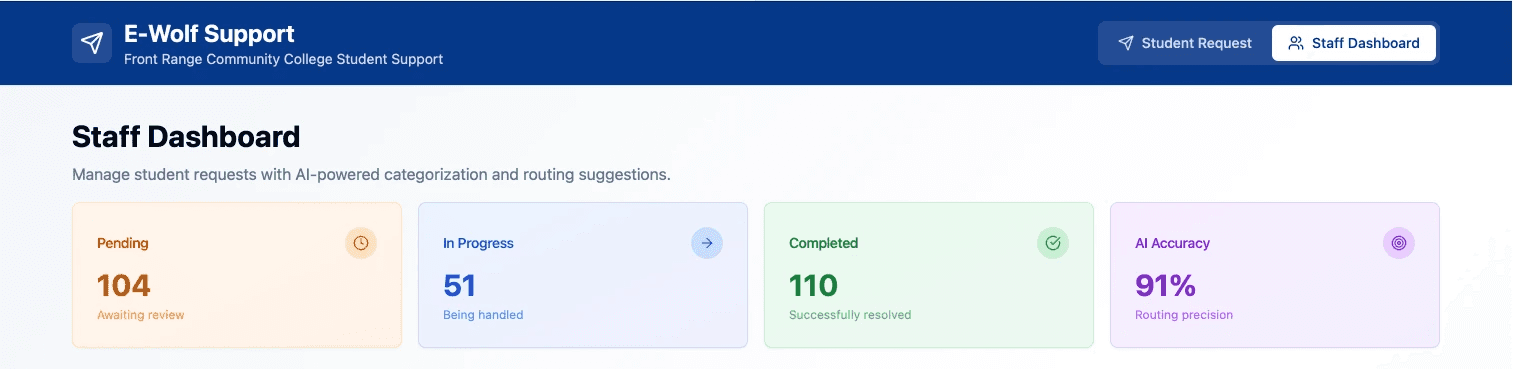

Staff Dashboard & Core Features

The staff-facing dashboard centralizes all student requests and layers AI assistance on top of existing workflows. The interface provides real-time visibility into workload, routing precision, and departmental trends, turning a previously fragmented support process into a unified, data-informed operations hub.

Key capabilities include:

1. Unified Request Management

Staff can view all pending, in-progress, and completed requests in one place, supported by search, filtering, and clear status indicators. This consolidates email-based workflows and provides a single operational view for all student support activity.

A dedicated AI Accuracy metric on the dashboard tracks model performance in real time, allowing staff to understand how reliably the system classifies requests and where manual oversight may be needed.
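One plausible way to compute an AI Accuracy figure like this is sketched below, assuming the metric is defined as the share of staff-reviewed requests whose predicted department was kept rather than reassigned. The `ReviewedRequest` record and `ai_accuracy` function are illustrative names, not the platform's actual schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ReviewedRequest:
    predicted_department: str  # department the model suggested
    final_department: str      # department staff actually routed it to


def ai_accuracy(reviewed: List[ReviewedRequest]) -> Optional[float]:
    """Share of staff-reviewed requests where the model's department was kept.

    Returns None when nothing has been reviewed yet, so a dashboard can show
    "no data" instead of a misleading 0% or 100%.
    """
    if not reviewed:
        return None
    # Booleans sum as 0/1, so this counts requests staff did not reassign.
    kept = sum(r.predicted_department == r.final_department for r in reviewed)
    return kept / len(reviewed)
```

Because the figure only counts requests staff have already reviewed, a drop in it points directly at categories where manual oversight matters most.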

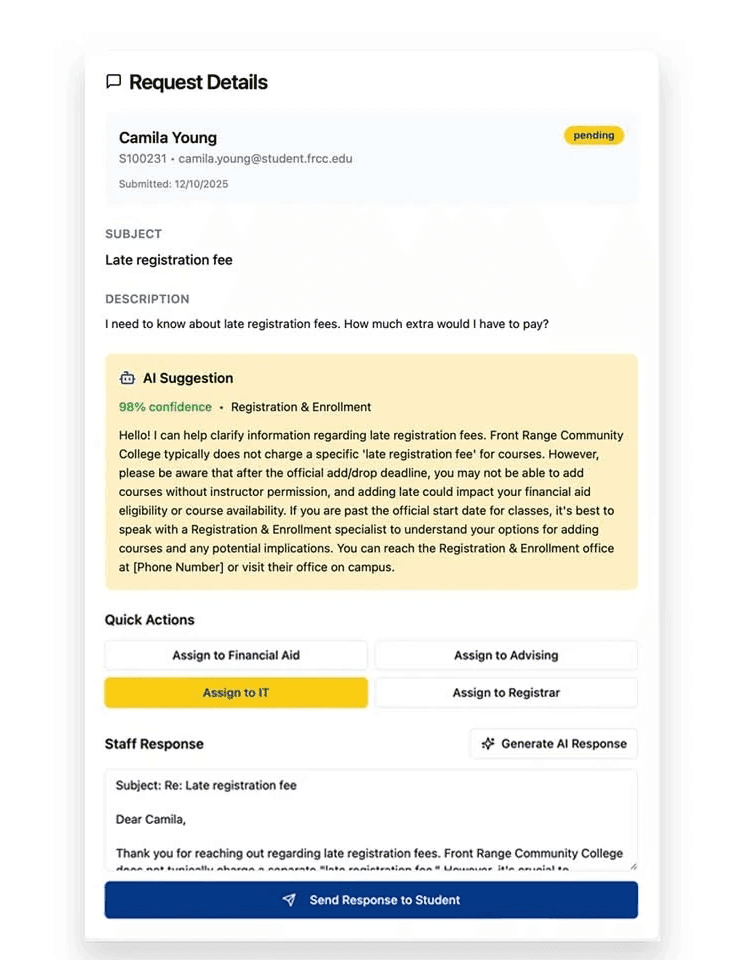

2. AI-Powered Categorization, Routing & Response Drafting

Every incoming request is analyzed by the model and assigned a predicted department with a confidence score. This reduces manual triage time and improves routing consistency across 13+ service areas.

For each request, the system generates a draft response aligned with institutional policies and tone. Staff can edit, refine, or regenerate the message before sending—maintaining 100% human oversight while accelerating turnaround times.

The AI-assisted response panel provides auto-generated, high-accuracy drafts that staff can review and personalize before sending.

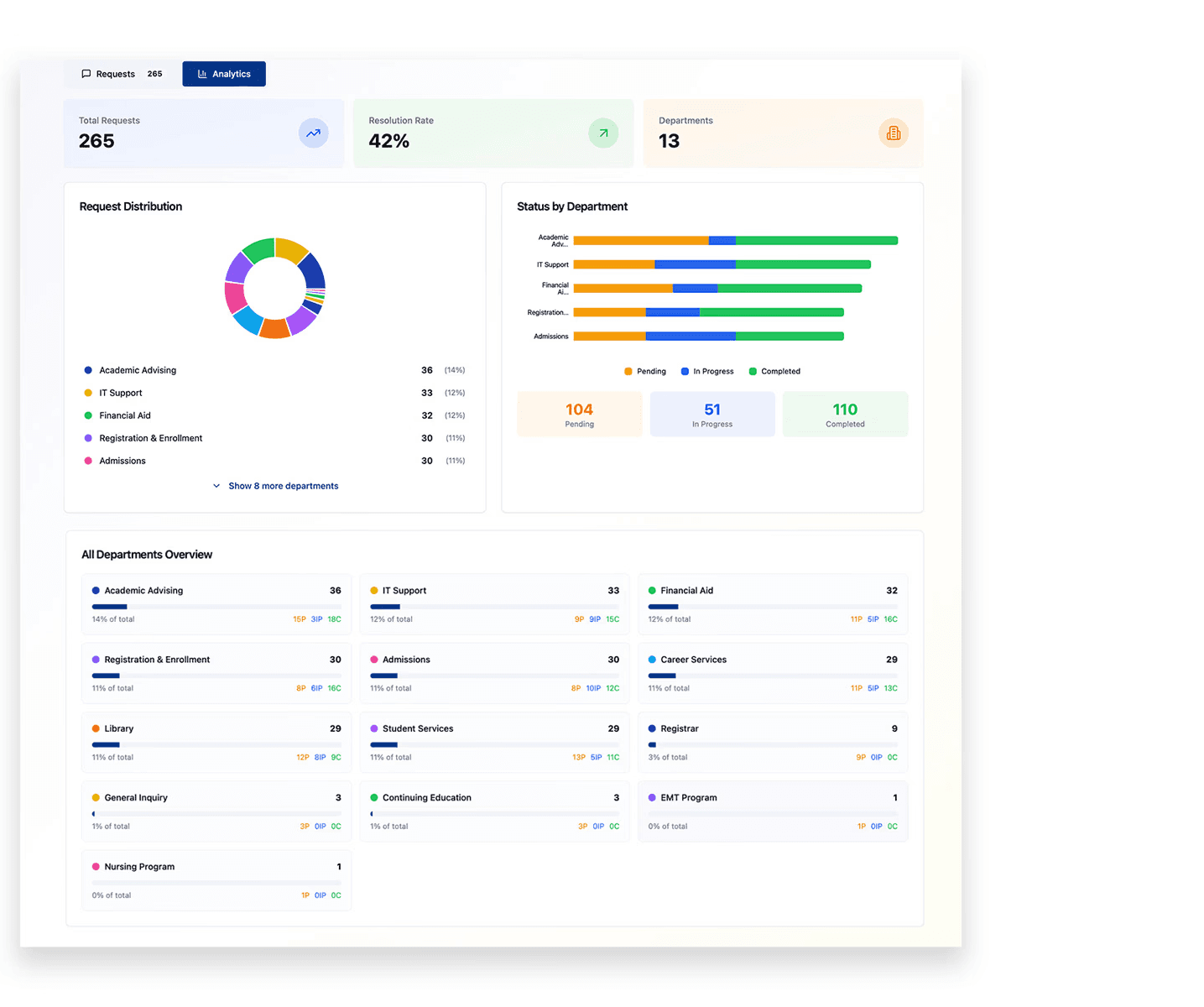
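The rule that staff review every draft before it goes out can be enforced as a hard gate rather than a convention. This minimal sketch assumes a simple in-memory draft record; `DraftResponse`, `NotApprovedError`, and `send` are hypothetical names, and `send` only returns the approved text as a stand-in for real delivery. Regeneration is not modeled here.

```python
from dataclasses import dataclass
from typing import Optional


class NotApprovedError(Exception):
    """Raised when something tries to send a draft no staff member has approved."""


@dataclass
class DraftResponse:
    request_id: str
    ai_text: str                      # text generated by the model
    final_text: Optional[str] = None  # text after staff review
    approved_by: Optional[str] = None

    def approve(self, staff_id: str, edited_text: Optional[str] = None) -> None:
        """Record staff sign-off, keeping their edits if they made any."""
        self.final_text = edited_text if edited_text is not None else self.ai_text
        self.approved_by = staff_id

    @property
    def was_edited(self) -> bool:
        # Edited drafts are one possible source of corrections the AI can learn from.
        return self.final_text is not None and self.final_text != self.ai_text


def send(draft: DraftResponse) -> str:
    """Return the outbound message, refusing anything without human approval."""
    if draft.approved_by is None or draft.final_text is None:
        raise NotApprovedError(f"Draft for {draft.request_id} has not been approved")
    return draft.final_text
```

Putting the check inside `send` means no code path can deliver an unreviewed draft, which is what keeps oversight at 100% rather than relying on staff habits.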

3. Departmental Analytics & Operational Insights

The analytics view highlights request load, distribution, and throughput across all departments. Donut charts and stacked bar graphs make it easy to identify bottlenecks, spike periods, and under-resourced areas.

These insights enable data-driven staffing decisions and help leadership anticipate student needs more proactively.

Testing Results & Projected Impact

Usability Testing Metrics

Testing Methodology

Sample Size: Prototype tested with 300+ authentic student request scenarios sourced from historical E-Wolf support data

Evaluation Criteria: AI routing accuracy, response completeness, confidence score reliability, and staff workflow integration

Stakeholder Validation: System design reviewed and approved by E-Wolf staff members who will use the platform once it is implemented

Personal Takeaway

Scaling Impact by Simplifying Complexity

The most meaningful insight from this work was seeing how much cognitive load and operational inefficiency came from unclear routing and inconsistent messaging. By simplifying the flow, tightening classifications, and designing AI-assisted tools that actually support staff, I saw how UX can unlock organizational efficiency, not by adding more technology but by ensuring it’s used thoughtfully. This project strengthened my conviction that the best UX outcomes happen when technology, process, and human judgment work together, not in isolation.